Stephen Hawking, plus physicists Max Tegmark and Frank Wilczek and AI expert Stuart Russell, have penned an editorial -- Transcending Complacency on Super-Intelligent Machines -- urging caution in research aimed at creating artificial intelligences. As if Hollywood had not already wagged that finger at us plenty of times!

To be clear, Hawking does not say (as many in the media have claimed) that AI in itself would be humanity's "worst mistake." He very clearly states that the mistake would be paying too little attention to our responsibilities in the creation process: taking insufficient care to get it right.

I know many members of the AI research community and this topic is widely discussed. But how do you take 'precautions' to keep new cybernetic minds friendly, when

(1) we don't know which of six very different, general types of approaches to this problem might eventually bear fruit and

(2) it is clear that the one method that cannot work is "laws of robotics" of Asimov fame.

I explore all six approaches in various works of fiction and nonfiction. One of the six approaches -- the one least-studied, of course -- offers what I deem our best hope for a "soft landing," in which human civilization and some recognizable version of our species would remain confidently in command of its own destiny.

At the opposite extreme is this possibility: AI might arrive that's deliberately designed to be predatory, parasitical, ruthless and destructive, in its very core and purpose. Alas, this is exactly the approach that is receiving more funding - and in secret - than all other forms of AI combined. And no, I am not talking about the military!

== How it all got started ==

Let's move back to a much earlier stretch of this road. A fun article in Time Magazine (online) celebrates the 50th anniversary of the clunky but epochal programming language BASIC. Technology journalist Harry McCracken traces the history of BASIC, how it was designed to give average students a portal into programming, and how it helped to launch both the PC revolution and the Microsoft empire.

McCracken cites my famous (or infamous) SALON article -- Why Johnny Can't Code -- about the demise of programming language accessibility in our modern computers, and how this had a tragic and harmful side-effect: eliminating from school textbooks the shared experience of introductory programming exercises that, for a decade or so, exposed millions of gen-Xers to small tastes of programming. Far more exposure than almost any millennial receives.

And no, I was not praising BASIC as a language, but rather the notion that there should be SOME kind of “lingua franca” included in all computers and tablets etc. For a decade, BASIC was so universally available that textbook publishers put simple programming exercises in most standard math and science texts. Teachers assigned them, and thus a far higher fraction of students gained a little experience fiddling with 12-line programs that would make a pixel move… and thus knew, in their guts, that every dot on every screen obeys an algorithm. That it’s not magic; it only looks that way. Apple and Microsoft and Red Hat could meet and settle this in a day -- after which textbook publishers and teachers could go back to assigning marvelous little computer exercises, a great way to introduce millions of kids to… the basics.
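To give a flavor of the kind of short "make a pixel move" exercise described above, here is a minimal sketch. It is written in Python rather than BASIC, and it uses a tiny text grid in place of real screen pixels; the grid size, the bounce rule, and the function names `render` and `frames` are illustrative assumptions, not taken from any actual textbook exercise.

```python
# A hypothetical modern take on a "TRY IT IN BASIC" textbook exercise:
# a single "pixel" (the * character) moves across a tiny text screen,
# one step per frame, following an explicit rule.

WIDTH, HEIGHT = 12, 3  # size of the pretend screen, in character cells


def render(x: int, y: int) -> str:
    """Draw the screen with exactly one lit pixel at column x, row y."""
    rows = []
    for row in range(HEIGHT):
        cells = ["*" if (col, row) == (x, y) else "." for col in range(WIDTH)]
        rows.append("".join(cells))
    return "\n".join(rows)


def frames(steps: int, x: int = 0, y: int = 1, dx: int = 1) -> list[str]:
    """The whole 'algorithm': move dx cells per frame, bounce off the edges."""
    out = []
    for _ in range(steps):
        out.append(render(x, y))
        if not 0 <= x + dx < WIDTH:  # next step would leave the screen: reverse
            dx = -dx
        x += dx
    return out


if __name__ == "__main__":
    for frame in frames(5):
        print(frame)
        print()
```

Every dot that appears is the direct result of two lines of arithmetic (`x += dx` and the edge check), which is the point the exercise is meant to make.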

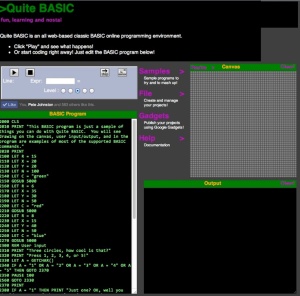

For those who want the simplest way to re-access BASIC, one of the readers of my "Johnny Can't Code" article went ahead and provided a turnkey, web-accessed module that you can use instantly to run old (or new) programs.

Have a look at "QuiteBasic"… one of the coolest things any of my readers were ever inspired to produce.

Alas, word has not reached the textbook committees. In a modern era when computers run everything, far fewer kids are exposed to programming than in the 1980s and 1990s. Are you really okay with that?

== Speaking of tech anniversaries ==

John Naughton published a fascinating article for the Observer, "25 things you might not know about the web on its 25th birthday." The big-picture perspectives are important, but one especially stands out to me:

Number 18 -- The web needs a micro-payment system. "In addition to being just a read-only system, the other initial drawback of the web was that it did not have a mechanism for rewarding people who published on it. That was because no efficient online payment system existed for securely processing very small transactions at large volumes. (Credit-card systems are too expensive and clumsy for small transactions.) But the absence of a micro-payment system led to the evolution of the web in a dysfunctional way: companies offered "free" services that had a hidden and undeclared cost, namely the exploitation of the personal data of users. This led to the grossly tilted playing field that we have today, in which online companies get users to do most of the work while only the companies reap the financial rewards."
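The parenthetical point about card fees can be made concrete with a little arithmetic. The sketch below assumes an illustrative fee schedule of a fixed 30 cents plus 2.9% per charge; that is the general flat-plus-percentage shape card processors use, but the specific numbers are an assumption, and real rates vary by processor and country.

```python
from decimal import Decimal

# ASSUMPTION: an illustrative card fee of a fixed 30 cents plus 2.9%
# per transaction. Real fee schedules vary by processor and country.
FIXED_FEE = Decimal("0.30")
PERCENT_FEE = Decimal("0.029")


def fee(amount: Decimal) -> Decimal:
    """Processing fee charged on a single card payment of `amount` dollars."""
    return FIXED_FEE + PERCENT_FEE * amount


def fee_share(amount: Decimal) -> Decimal:
    """Fee as a fraction of the payment itself (1 means 100%)."""
    return fee(amount) / amount


if __name__ == "__main__":
    for price in ("0.01", "0.25", "20.00"):
        p = Decimal(price)
        print(f"${p}: fee ${fee(p):.4f} = {fee_share(p):.1%} of the payment")
```

Under these assumed rates, the fee on a one-cent payment is about thirty times the payment itself, while on a $20 purchase it is under 5%. The fixed per-transaction component is what makes small payments uneconomical.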

I've been working for some time on innovations that (in collaboration with others) could utterly transform the web (and incidentally save the profession of journalism) by making micro payments workable and effective. Got patents too! That and $3.65 will get you a small cappuccino.

== Is the West - especially America - just weird? ==

A large fraction of social science research has focused on the most-accessible supply of survey and test subjects -- westerners and especially Americans -- under the assumption that "we're all the same under the skin." Hence tests that dive below obvious cultural biases ought to show what's basically human. But lo and behold, it seems that westerners and especially Americans are "weird" compared to almost all other cultures, in ways exposed by simple Prisoner's Dilemma-type games and many others that study - say - individuality.
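For readers who haven't met it, a Prisoner's Dilemma game has a specific structure, which the sketch below shows. It uses the common textbook payoff values T=5, R=3, P=1, S=0; these are an assumption chosen only to satisfy T > R > P > S, not the stakes used in any particular cross-cultural study. The names `PAYOFFS` and `best_response` are hypothetical.

```python
# Payoffs for one round of a classic Prisoner's Dilemma, as
# (player A's payoff, player B's payoff).
# ASSUMPTION: textbook values T=5, R=3, P=1, S=0 (temptation, reward,
# punishment, sucker), picked only so that T > R > P > S.
MOVES = ("cooperate", "defect")
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),  # R, R: mutual cooperation
    ("cooperate", "defect"): (0, 5),     # S, T: the cooperator is exploited
    ("defect", "cooperate"): (5, 0),     # T, S
    ("defect", "defect"): (1, 1),        # P, P: mutual defection
}


def best_response(opponent_move: str) -> str:
    """Player A's move that maximizes A's own payoff against a fixed opponent move."""
    return max(MOVES, key=lambda move: PAYOFFS[(move, opponent_move)][0])


if __name__ == "__main__":
    for other in MOVES:
        print(f"If the other player will {other}, the selfish best reply is to {best_response(other)}")
```

Defecting is the best reply whatever the other player does, yet both players do better cooperating (3 each) than both defecting (1 each). Studies in this vein measure how far real people from different cultures depart from the "selfish" prediction; the sketch models only the game, not any study's data.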

In fact, anyone who compares western - and especially American - values, processes, history and so on with those of almost any other culture that ever existed would have been able to tell you that. (Californians are even weirder! Ask Robert Heinlein.) The question is: "weird" as in dangerously unhinged? Or in the sense of "our one chance to escape the traps that mired every other civilization into a pit of dashed hopes and potential?" The jury is still out.

== Art and theology? ==

Speaking of cryptic time messages… Charles Smith (and some others) pointed out a fascinating aspect of the centerpiece image in Michelangelo's Sistine Chapel paintings… "Apparently, Michelangelo placed god and various angels within what is clearly the outline of a human brain. Am I imagining this? Maybe, but I doubt it. Having dissected many cadavers, he knew his anatomy. He was trying to tell us something about the human mind and its relationship to the idea of god… the idea that man created god in his own image."

It's even better. The deity's legs are sticking out through the cerebellum and his hand of creation emerges from the prefrontal lobes.

Aw heck, let's stay with "theology" for a while, this time in quotation marks because it is the raging foolish kind. Mega best-sellers are out there proclaiming portent in the "blood moon."

Astronomers apply no significance to the way lunar eclipses - or "blood moons" - sometimes come in groups of four, or "tetrads." But that has not prevented a spate of blood moon mysticism. The four eclipses in this tetrad occur on April 15 (last month) and Oct. 8, 2014, and April 4 and Sept. 28 next year… all of them occurring during Jewish holidays! Which has provoked a bunch of Christian (not Jewish) mystical last-days mongering. Both of the ones in April occur during Passover, and the ones in October occur during the Jewish Feast of Tabernacles.

I'll leave it to you to find these sites (Google "blood moon prophecy") but at one level it is just more of the dopey millennialism I talk about here… wherein meme-pushers sell the sanctimony drug-high of in-group "knowledge" to a portion of the public who do not like the very idea of the Future and yearn for it to just go away.

In this case, the "coincidental" overlap of lunar eclipses with Jewish holidays is not "one-in-tens-of-thousands(!)" but almost one-to-one. Passover and the Feast of Tabernacles are defined as starting on the 15th of their lunar months, which is to say at the full moon, the only time a lunar eclipse can occur. Also, lunar eclipses come in "seasons" roughly six months apart (an eclipse happens only when the full moon lies near one of the two points where the moon's tilted orbit crosses the Earth-Sun plane), and those two festivals sit exactly six lunar months apart. Hence, of course, clusters of total lunar eclipses will line up with those two holidays. It is not "coincidence" or a "sign" but rather a matter of deliberate calendar design… exploited by these "blood moon" predators, preying on the gullible.
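The spacing argument is simple arithmetic. The sketch below uses mean astronomical values (rounded, and an assumption in the sense that individual lunations and eclipses deviate from the mean). It relies on the fact that the Hebrew months Nisan through Elul have fixed lengths, so 15 Nisan (Passover) to 15 Tishrei (Tabernacles) is always the same number of days.

```python
# ASSUMPTIONS: mean values, rounded; individual lunations vary by hours.
SYNODIC_MONTH = 29.530589  # mean days from one full moon to the next
ECLIPSE_YEAR = 346.620075  # mean days for the Sun to return to the same lunar node

# Fixed month lengths from Nisan through Elul in the Hebrew calendar.
NISAN_TO_ELUL = {"Nisan": 30, "Iyar": 29, "Sivan": 30, "Tammuz": 29, "Av": 30, "Elul": 29}

days_between_festivals = sum(NISAN_TO_ELUL.values())  # 15 Nisan -> 15 Tishrei: 177
six_lunations = 6 * SYNODIC_MONTH                     # about 177.18 days
eclipse_season_spacing = ECLIPSE_YEAR / 2             # about 173.31 days

if __name__ == "__main__":
    print(f"15 Nisan to 15 Tishrei: {days_between_festivals} days")
    print(f"Six full moons later:   {six_lunations:.2f} days")
    print(f"Eclipse-season spacing: {eclipse_season_spacing:.2f} days")
```

The two festivals are almost exactly six full moons apart, and eclipse seasons recur only a few days more often than that. Consecutive lunar eclipses, which are typically six lunations apart, therefore tend to land on Passover and Tabernacles together. Whether a particular full moon is actually eclipsed still depends on how close it falls to a node; the sketch shows only why the spacing matches.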

The good news? This will pass. Alas, it will be replaced by the next nonsense, then the next. Till we persuade our neighbors to stop hating tomorrow.

== Science Miscellany ==

University of Washington (UW) graduate Thomas Larson is developing a lens that will turn any smartphone or tablet computer into a handheld microscope that magnifies by 150 times.

Wow. A supernova that just happened to be magnified by a gravitational lens of an intervening galaxy.

Fascinating. We assumed all brain neurons needed uniform sheaths of myelin to perform well. Apparently not!

Ancient shrimp had a cardiovascular system 520 million years ago, earlier than any other known creature. The fossil dates back to a period when the "Cambrian Explosion" was taking off. Possibly the one time aliens DID meddle with our planet… by flushing a toilet here?

Interesting question: Could playfulness be embedded in the universe?

Fascinating news about the importance of the Y Chromosome. Huh. Maybe males aren't about to go away, after all. Sorry about that.

52 comments:

In a modern era when computers run everything, far fewer kids are exposed to programming than in the 1980s and 1990s.

This is simply not true.

You're looking at the past with rose-colored glasses and from a perspective of privilege. *Most* kids did not possess the means in the 80s/early 90s. I know because I am a Gen-Xer who yearned for this but did not have the financial means (nor availability) to pursue it until college. But anecdotes aside, just look at PC ownership figures -- they don't tip above 50% until the 21st century.

Today, programming is a tap or click away. And you can easily engage in the discipline with a panoply of resources and more importantly, knowledgeable guides to advise you. The problem is more one of desire, or lack of desire.

Naum, you don't get it. Sure " you can easily engage in the discipline with a panoply of resources"… and the few who do that have a vast (and confusing) plethora of options, if they are motivated.

But that is not what I was talking about -- the motivated. I have the textbooks from the 90s right here and yes, there weren't as many homes with computers… so some of the students had to do the TRY IT IN BASIC assignments in the school's computer lab. So?

Those TRY IT IN BASIC exercises and turn-key access to BASIC meant that millions of kids got to at least poke at a very simple level of programming… a level that could come back, within a day of Msof and apple deciding to make it so.

"a level that could come back, within a day of Msof and apple deciding to make it so."

You misspelled "Google".

A simplified app development language ("Simple") and fully sandboxed runtime environment ("Simple Box") available on Android devices, and a way of freely trading Simple Apps without going through the app-store. Not all device functionality would be available in the RTE, but it would be much easier to write code that works across all devices. And, IMO, it would fit Google's apparent psychology much more than Apple's.

"In a modern era when computers run everything, far fewer kids are exposed to programming than in the 1980s and 1990s."

Like Naum, I really think you overestimate how many kids ever looked twice at those '80s text-book exercises, and drastically underestimate how many kids are exposed to coding today through modding, website scripting, and other sources.

"there should be SOME kind of "lingua franca" included in all computers and tablets etc."

It's called JavaScript. It's on everything.

[HTML is the equivalent of writing ".bat" files, and JS is the equivalent of BASIC.]

The eye may process and predict movement, sending to the brain not only an image of what is happening, but a rough estimate of what will happen next.

http://www.npr.org/2014/05/05/309694759/computer-game-aides-scientist-mapping-eye-nerve-cells

[Also they recruited over 100,000 people to map out a mouse retina by turning it into a game.]

If this is repeated in other types of nerves, perhaps the "brain" actually extends throughout the whole body. The thing in your head is just a particularly dense clump. (That came out wrong.)

Paul, you still neglect to follow. Most of the app-making programming languages never take you down to the layer of fundamental algorithm and making pixels obey them.

Um. 'Compaclency'?

You can certainly get languages and use them to handle algorithms. I agree that graphics have been heavily abstracted.

Yes, I have fond memories of poking data into the 6K area of RAM used to store the graphics display on my Sinclair ZX Spectrum. Trouble is, the hardware is far too complex to allow that now. Even then, it was a mystery how those 6K of RAM were converted to glowing phosphors.
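Part of the Spectrum's mystery was that its 6K bitmap (6,144 bytes: 256 x 192 pixels at one bit each, with colour held in a separate 768-byte attribute area) is not stored row by row. The sketch below shows the standard pixel-to-address calculation. It is written in Python for illustration; the function name `spectrum_pixel_address` is hypothetical.

```python
def spectrum_pixel_address(x: int, y: int) -> tuple[int, int]:
    """Return (byte address, bit mask) for pixel (x, y) on the ZX Spectrum's
    256x192 bitmap display, which starts at address 0x4000."""
    if not (0 <= x < 256 and 0 <= y < 192):
        raise ValueError("pixel outside the 256x192 display")
    address = (
        0x4000
        | ((y & 0xC0) << 5)  # y bits 7-6: which third of the screen
        | ((y & 0x07) << 8)  # y bits 2-0: pixel row inside an 8x8 character cell
        | ((y & 0x38) << 2)  # y bits 5-3: character row inside that third
        | (x >> 3)           # 8 pixels per byte, so x / 8 is the byte column
    )
    mask = 0x80 >> (x & 7)   # leftmost pixel of each byte is the high bit
    return address, mask


if __name__ == "__main__":
    for y in (0, 1, 8, 64):
        print(y, hex(spectrum_pixel_address(0, y)[0]))
```

Vertically adjacent pixel rows are 256 bytes apart in memory, not 32. That is why a naive loop poking screen memory in address order fills the display in the familiar interleaved, venetian-blind pattern.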

I'm with David over the programming issue, but from another perspective. The university students I teach are the "digital natives" yet that seems to mean comfort with social media applications. They are "number blind" (cannot determine if their calculator answer is correct) and cannot even use a spreadsheet to solve simple problems. I note the UK is going to reintroduce coding in schools. From the snippets I've seen, it looks like HTML + Javascript and Python are the preferred languages.

Regarding Michelangelo's "Creation of Adam". Seeing God inside a brain is one person's interpretation. Another interpretation, that looks more salient, is that it is a uterus. This picture seems more supportive of the latter interpretation.

Interesting dept. Research is suggesting that the protein GDF11 is the active factor in experiments that "rejuvenate" old mice when they are given blood from young mice. The protein is the same in humans. While not life extension, rejuvenation would certainly be a very significant medical treatment. Much simpler than the treatments in Sterling's "Holy Fire". We'll see if this pans out in clinical trials.

A core characteristic of the Internet is as a transaction landscape...

Forget money for a moment... but all those photos, tweets, status updates, comments, likes, shares, etc... are TRANSACTIONS!

The miracle of the Internet is that it has essentially brought the cost of these transactions to near zero... and became a proximity substitute (overcoming distance)

This also can and must be done for money.

I've found it curious, and frankly surprising, how few understand its import... (most think that it's either "nice but irrelevant" or "impractical and dangerous".)

Well, once it's here it'll quickly be recognized as much more important than tweets, texts and the rest... though I'll concede that it'll have its issues.

Small money and large numbers is a very, very big deal.

Re: WEIRD brains. Good to see that research is showing that culture is a determinant of how brains develop and how people see their world. Stripping away culture suggests that research into more fundamental cognition needs to focus more on our animal relatives. It also suggests, as we have known for a long time, that education is important. Religious fundamentalists understand this, which is why they so often resist it for their children.

@Tom Crowl

A core characteristic of the Internet is as a transaction landscape...

The miracle of the Internet is that it has essentially brought the cost of these transactions to near zero... and became a proximity substitute (overcoming distance)

This also can and must be done for money.

This does not logically follow. There are other ways to provide the service, e.g. as a public one paid for with taxes.

As a journalist I've lamented the fact that we don't have micropayment systems already in place. If my smart transit card can deduct just two dollars at a time from a $20 reservoir saved to my card, one step removed from my bank account, then why can't we pay a fraction of a penny for every article we read? We used to pay 25 cents for a newspaper; surely people would pay a penny or less per article. As David says, it could save journalism, providing a funding source both for large organizations such as newspapers and for specialty journalists working on their own.
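The transit-card analogy describes a prepaid reservoir: one ordinary payment loads a balance, and many tiny deductions draw it down without a card charge each time. Below is a minimal sketch under that assumption. The `PrepaidWallet` class, the millicent unit, and the batched settlement step are illustrative choices, not any specific proposed system.

```python
from collections import defaultdict


class PrepaidWallet:
    """Hypothetical micropayment wallet: one top-up funds many tiny
    per-article charges, which are paid out to publishers in a batch.
    Amounts are integers in millicents (1/1000 of a cent) to avoid
    floating-point rounding errors."""

    def __init__(self, balance_millicents: int):
        self.balance = balance_millicents
        self.owed = defaultdict(int)  # publisher -> millicents accumulated

    def pay(self, publisher: str, price_millicents: int) -> bool:
        """Deduct one small charge; refuse it if the reservoir is too low."""
        if price_millicents <= 0:
            raise ValueError("price must be positive")
        if price_millicents > self.balance:
            return False
        self.balance -= price_millicents
        self.owed[publisher] += price_millicents
        return True

    def settle(self) -> dict[str, int]:
        """One batched payout per publisher instead of one card charge per article."""
        payouts = dict(self.owed)
        self.owed.clear()
        return payouts


if __name__ == "__main__":
    wallet = PrepaidWallet(2_000_000)  # a $20 top-up
    for _ in range(300):
        wallet.pay("daily", 500)       # 300 articles at half a cent each
    print(wallet.balance, wallet.settle())
```

With these assumed numbers, 300 half-cent articles draw a $20 reservoir down by $1.50 and produce a single settlement entry rather than 300 separate card transactions. That batching is what sidesteps the fixed per-transaction fees. It does not address the reader's effort of deciding whether each article is worth paying for.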

@Mel - micropayments will not save journalism, and the reason why has been researched. While it seems that users might be willing to pay for content, one problem is that the user must be able to evaluate whether it is worth paying and then make a payment. This costs effort that is not worth the time for each tiny payment. What will happen is that charges will drive readership away to free material, of which there is an abundance. If you have a readership, advertising revenue will probably be a better solution.

Mel B, a number of us have been working on micro-payments proposals. I believe I have the "secret sauce" that would make it work. Email me separately and I'll send you my draft.

I think the BASIC essay / rant / concerns were valid when it came out.

But the Maker movement in general and the Raspberry Pi in particular have changed everything.

If you want to teach kids programming, you get them (or loan them) a Raspberry Pi and have them learn Scratch and/or Python.

But that is not what I was talking about -- the motivated. I have the textbooks from the 90s right here and yes, there weren't as many homes with computers… so some of the students had to do the TRY IT IN BASIC assignments in the school's computer lab. So?

Most kids didn't "TRY IT IN BASIC" -- they simply turned the page in the textbook, and most schools didn't enforce it (yes, I know computers proliferated into classrooms in the 90s, but most teachers were oblivious or the numbers simply weren't great enough -- it's called a "PC" for a reason :)). But even at that, it was a FRACTION (both nationally and globally) of the kids who today have programming available at a click or tap.

The question or issue is the same now as then, one of desire.

On micropayments, it all makes sense, but why hasn't it come to be? Maybe it doesn't make sense, as this has been lamented and tried since the dawn of the WWW in the 90s.

Vampire Therapy!

Scientists found they could reverse some signs of aging by doing frequent transfusions with blood from a younger specimen.

Somebody owes Lady Bathory an apology.

Stefan and Naum, the question might be "of desire." But in those days the "desire" could be that of a teacher, demanding that all students do the programs either at home or in the computer lab.

And some school districts required it in all schools. And I give up. This concept just does not come across to you DIY self-motivated types … that most human kids do not reach out beyond what is demanded of them.

Re: the WEIRD Americans -

Perhaps these folks need to read your article about the Dogma of Otherness... :-)

Announcement! I am telling you blogmunity fellows first: I have five memberships to Loncon3, the World Science Fiction Convention (August 14-18 in London, UK), that I must dispose of at a reasonable discount from the rate at the door.

http://www.loncon3.org/

Now's your chance to get reasonable-priced passes to the greatest sci fi show on Earth… in merrie olde England.

David,

"And I give up. This concept just does not come across to you DIY self-motivated types … that most human kids do not reach out beyond what is demanded of them."

[laughs] I think the problem isn't that we disagree that most kids won't access online programming resources unless someone makes them, we're disagreeing that "things were better in my day". This may be another WEIRD issue - you may have had a very rarefied experience in the 80's & 90's. Most of us, even nerdy "computer-types", didn't have schools that pushed BASIC, didn't have text-books that had BASIC examples (and if they did, we didn't do those exercises even if we were learning BASIC from other sources. From memory, I only ever copied code from computer magazines.)

That's entirely separate from the issue of whether kids would be better off with a greater understanding of the guts of their many many computer devices, with experience writing the magic spells that push raw pixels around, and with experience thinking algorithmically.

So I'd not object to a push for introducing abstract algorithms in maths. And a "computing and robotics" class for hardware theory and coding. You've got them for 12 years, it should be possible to go from Lego to Raspberry Pi, move-by-move simplified robotics to JS over HTML online or python/etc. Plus optional electives in design, modelling, Office-ware, game making, 3d-printing, analogue and digital electronics, etc, etc.

But...

...This would not be "returning to how things used to be". It would be a whole new thing we'd be introducing to the world.

I worked with my 12 year old daughter on a science project using an Arduino micro controller. This required some cut and paste style modifications to the C++ / C programs. Now we are moving on into programming wholesale for more complex projects. We are working out of "Programming Arduino: Getting Started with Sketches," and are having fun so far. I think that starting off with an Arduino is an excellent way to build up to larger concepts. You get to manipulate hardware, you get to bemoan programming bugs. All fun.

[laughs] I think the problem isn't that we disagree that most kids won't access online programming resources unless someone makes them, we're disagreeing that "things were better in my day". This may be another WEIRD issue - you may have had a very rarefied experience in the 80's & 90's. Most of us, even nerdy "computer-types", didn't have schools that pushed BASIC, didn't have text-books that had BASIC examples…

Nailed it.

But interest in programming is a self-desire kind of thing -- I remember, a few years ago, buying a few OLPC machines and giving them to friends (including one who was heading overseas on a mission trip). Their kids shunned these things, yet take to tablets like nothing else (including the very young, for whom the touch UX has greater attraction than just about any tech (or low-tech) ever). Yet there is a small contingent that aspires to poke and prod and tinker and build…

Only tangentially related, but I'm reading *The Dream Machine: J.C.R. Licklider and the Revolution That Made Computing Personal* (a fascinating read, mainly focused on J.C.R. Licklider, an instrumental figure in the history of computing whom most have probably never heard of, but it also traverses the arc of networked computing and computing advances in general). In one of the chapters, the quest back in the 50s/60s to discover what made a programmer, and where programmers could be recruited, was intriguing -- first, they went after math students/scholars, but it turned out that the correspondence there was far from 1:1, with many not cut out for programming, despite the common-sense logic. Then chess mavens, but that was not a hit either. Then they (government/scientists at DARPA or whatever it was called before that, etc.) looked at other demographics, but nothing seemed to fit, other than that a small % of people possessed an inclination and interest for programming computing machines. Yes, I realize the BASIC question was directed toward general science interest (what makes the machine come alive, so to speak), but talk of this always takes me back to this narrative for some reason.

Naum,

"first, they went after math students/scholars"

...but I did nothing, for I wasn't a math student... (Ahem. Sorry.)

"a small % of people possessed an inclination and interest for programming computing machines."

This is why I think reintroducing "computing/programming" into schools shouldn't be an attempt to go back to a mythical era of BASIC. Or one universal language to rule them all. By having hardware and software, and having design and modelling, you are appealing to the Maker-instinct. IMO, that's what kids got out of writing BASIC, out of seeing bits pushing pixels. But not every would-be Maker is interested in the technical nature of coding. Fortunately, modern computing has everything from robotics and circuit design to animation, modelling, and 3d-printing. We can sneak in through many doors, appeal to many types. Unleash the creativity of those who would have no interest in coding. Turn them from passive swipers of locked-down machines to active creators.

And, David, isn't that the real goal?

IMO, any kid who would have, back in the day, gotten into programming through BASIC is today already getting into it through modding and scripting, or through robotics kits or Arduino boards. They aren't the ones we lost. We lost the knitters and carvers, the drawers and moulders, the drillers and wrenchers. Those are the ones we need to introduce to the vast array of creative means at their disposal, now that the traditional means have been largely lost or locked down.

Paul… very interesting! Clever… tho as you say, the opportunity may have passed.

I have a different approach….

Okay, that was weird.

Ok, those Nigerian terrorist guys who are on TV bragging about how Allah wants them to kidnap and sell girls...

What am I missing. I understand crimes of passion, crimes of profit, crimes of opportunity, even out-and-out sociopathy. But I don't understand what makes real-life people reimagine themselves as full-blown supervillains.

Way back in the 70s when my brother and I were teenagers, full of confident "maturity", we laughed hysterically at a rant by Captain America comic book villain The Red Skull: "Wherever there was tyranny, ruthlessness, injustice, The Red Skull was there, preying on the weak and helpless!" What a bunch of rubes, we thought. No one, not even a Nazi supervillain, would actually say or think something like that about himself.

Guess I owe Stan Lee and Jack Kirby an apology.

Dr Brin,

Concerning your disdain for Luke Skywalker's "plan" to rescue Han Solo...

Ok, maybe my enjoyment of that part of the film is colored by nostalgia. I can't explain why I like the 1960s "Batman" tv show to anyone who doesn't share the feeling, and I suspect my enjoyment of the first part of RotJ is similar.

But let me also suggest that your dismissal of that "plan" is based on your buying into the notion that Luke is some sort of chosen Übermensch. If, on the other hand, Luke really is just a teenaged boy barely off the farm, the fact that he was long on bravado and short on planning might make perfect sense.

LarryHart, Goebbels openly proclaimed "we are the barbarians."

Re the opening to JEDI. Luke is a high commander in the second most powerful military force in the galaxy. He is also heir to the entire Jedi Council. If he wants to borrow a few X wings to surround Jabba's castle and make him release another rebel hero… um… who's gonna say no?

And maybe while they're at it capture the bounty hunter?

Sorry, it is both obvious and simple that would be his first plan. Dig it… that would take maybe two minutes… then the Empire shows up and Luke sends his pals away to safety and he is left in the lurch to fall back on Plan B… at which point you've changed just two minutes and the rest of the first act can proceed as before!

Only in that case he is not a flaming idiot.

I've learned a few things about where the schools are going and where they could be going since we last had this round.

Like you, David, I don't care about the programming language in use to teach algorithmic mathematics. BASIC is a good choice, if it's a modern variant, but Python or whatever else is also fine. As long as it's not C or C++.

But what I've been able to see is that the schools, perhaps too late for this cohort of high schoolers, intend to put the tools in every kid's hands where they can. They call them "1:1 initiatives", meaning that, for the instructional time at least and 24/7 at best, each child has the same access to computer technology as the wealthiest child, with that technology in active use in teaching and learning in the schools.

So the trajectory on this is positive, and, done right, it's going to give a huge population of people an open invitation to "try it in " along with teachers who actively urge it. A reason for optimism.

Also, I'm a backer on the Micro Phone Lens! Really looking forward to that little gizmo.

An unhealthy emphasis on competition lies at the heart of both David's programmers (makers) versus end-users (consumers) argument and the AI controversy, as our society uses & abuses the idea of competition to actively discourage the expression of individual creativity, originality & independent thought.

This is the price we pay when we rush to separate adequate from good, good from better and better from best: We create a rigid hierarchy (a barrier) that cannot be overcome, by & large and/or 'on average', and we see this discouragingly restrictive (social) pattern repeated over & over.

Gone are the days of 'adequate' when every individual, amateur, fixer, farmer, manufacturer, craftsman, journalist, musician, athlete & thinker could survive on the basis of being 'good enough'. Every little town had its own academic, artist, photographer, expert, newspaper, chamber group, council, orchestra or potential 'Olympian'.

But this was before an unhealthy emphasis on national & global competition dismissed these amateurs as 'substandard', closing the little factories as inefficient, mocking the small-town expert as a 'bumpkin', dismissing the local scientist as a 'dabbler', denigrating the local musician as 'amateurish', comparing the unpolished athlete to the most accomplished professional.

And you wonder why the public (in general) appears uninterested in government, science, technology, music, mathematics, programming & art?? For the majority of us, the only way to win this game is not to play because we (the majority) must lose when we choose the best.

This is especially true for 'thinkers' like Stephen Hawking when & if we invent AIs to do our thinking for us.

Best

LarryHart:

Last I saw, the human trafficking industry was worth about $32B USD a year. If you want to finance your group's operations, nabbing a few girls and selling them can produce the cash you need. There are ready buyers, and customers for those who buy them.

It's not hard to imagine the future these girls face.

My brother and I programmed his Commodore to move pixels in orbits. We kept track of vectors. Nowadays kids would be bored with pixels, but they could program something similar with a cool ship instead of a pixel; the math would be the same. You could also challenge them to do stuff like have a game character fall off a cliff realistically at 60 frames per second, with the acceleration of gravity. To put all this another way, one great way to learn something is to teach that subject. If a kid can teach a computer to do it, she will learn it herself.
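For anyone who wants to try the cliff exercise, here is a minimal sketch of what a kid might end up writing. It assumes a fixed 60 frames-per-second update loop, Earth-surface gravity in metres per second squared, and a hypothetical function name `fall_frames` and 20 m cliff chosen just for illustration. Each frame, gravity changes the velocity and the velocity changes the position, which is the same vector bookkeeping as the orbiting pixels, only in one dimension.

```python
# A minimal sketch: a character dropped from rest off a cliff, updated at a
# fixed 60 frames per second. Names and the cliff height are hypothetical.
GRAVITY = 9.81     # m/s^2, pulling downward
FPS = 60           # frames per second
DT = 1.0 / FPS     # seconds per frame


def fall_frames(cliff_height):
    """Count frames until a character dropped from rest at cliff_height
    metres reaches the ground, using semi-implicit Euler integration
    (update velocity first, then position)."""
    y = cliff_height   # height above the ground, metres
    vy = 0.0           # vertical velocity, m/s (negative means falling)
    frames = 0
    while y > 0:
        vy -= GRAVITY * DT   # gravity changes the velocity...
        y += vy * DT         # ...and the velocity changes the position
        frames += 1
    return frames


if __name__ == "__main__":
    n = fall_frames(20.0)
    print(n, "frames, or about", round(n / FPS, 2), "seconds")
```

A nice follow-up lesson is to compare the frame count against the textbook answer, the square root of 2h/g, and ask why the two differ slightly.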

Jumper: I still think it's important to start with pixels because no screen will ever look the same. But yes, graduate rapidly from there.

Geez! Locum is in cogent and intelligent form today! He makes a clear and incisive argument, with many telling points. Please, man, eat whatever it is you ate today.

Were I pushing for dog-eat-dog competition, without compassion or humanity, that point might be valid. But that has little to do with my meaning. Robert Heinlein in BEYOND THIS HORIZON made clear that his ideal society would be socialistic re every human need…

…but flaming competitive in every field involving human creativity, because it's been shown clearly that the arts, sciences, and creative services like restaurants, etc., flourish best in flat-open-fair competitive mode.

And that goes triple for public policy.

Alas that is all I have time for.

@Alfred Differ:

I'm old enough to understand that financial gain will drive people to commit evil acts. More's the pity, but it's comprehensible.

The part I don't understand is the appearing on TV all but twirling the mustache and going "Mwah, hah, hah, I'm EVIL!" That's the part I don't understand anyone actually playing in the real world. To what end?

Dr Brin:

Re the opening to JEDI. Luke is a high commander in the second most powerful military force in the galaxy. He is also heir to the entire Jedi Council. If he wants to borrow a few X wings to surround Jabba's castle and make him release another rebel hero… um… who's gonna say no?

I guess it's the fact that Luke has risen so far so quickly in the military hierarchy that I find implausible, not the fact that he fails to live up to the rank.

I wonder what our host thinks of this: http://blog.ucsusa.org/the-secret-science-reform-act-perhaps-we-should-just-call-it-catch-22-417

Concerning BASIC, have a look at www.quitebasic.com, a web-based BASIC environment. And when they hit the limits of BASIC, Apple's still not charging for Xcode...

LarryHart:

Do the real super-villains see themselves as evil, though? Look through the best comics again and I think you'll see the ones with developed characters never do. Their vision drives certain behaviors, but the ends always justify (morally) the means... somehow.

My personal measure of another's level of fanaticism is how they rationalize the means they use. I don't mind the end goals so much as they are often ideals that can never be achieved, but the path toward them CAN be walked. When the cowboy rides off into the sunset, he never quite gets there, but he does manage to trample the cactus along the way.

I learned BASIC on a PDP in the late '70s using a teletype, so moving pixels wasn't an option for me. What initially drew me in was the power of changing the text that appeared in front of me. Simple 'hello world' programs turned into text patterns and decision aids. What really hooked me, though, was being able to do crude drawings on paper of the math functions I couldn't easily visualize.

I'm not knocking pixel moving as a motivator, but even a batch mode approach to these tools can hook kids. It was enough for me to chase opportunities to learn more and even figure out how to get a loan from my credit union (when they shouldn't have even given me the time of day) to buy my first Commodore PET. It was only after I owned my own that I got to poke pixels around.

Tim H: Quitebasic was built at my behest!

Joe Roth thanks: My response to the proposed “Secret Science Reform Act” considered by the House Science, Space and Technology Committee.

The Act would require the Environmental Protection Agency to make public all data, scientific analyses, materials and models before promulgating any regulations. Because EPA makes rules based on current scientific understanding of the impact of pollution, this proposed law would prohibit the agency from moving forward with regulations unless all that information was openly available, which it often isn’t for a variety of reasons. There is no accompanying requirement for industry to make data public for use by the agency, nor a requirement to waive the privacy rules that often accompany public health data, nor a waiver of trade secrets or intellectual property. In fact, the same bill clearly states that EPA may not publicly disclose any such information.

http://blog.ucsusa.org/the-secret-science-reform-act-perhaps-we-should-just-call-it-catch-22-417

This is identical in its nefarious trickery to "voter repression" laws in many red states that require registered voters to present levels of ID that are often hard to come by for the poor, minorities, married or divorced women and so on… coincidentally (lo and behold) often Democratic-leaning demographics. As a moderate, I am not opposed to increasing the demand that voters prove who they are! But if these laws weren't aimed solely at stealing elections for the GOP, these states would have accompanied these new regulations with measures aimed at helping their citizens comply with the new burdens. States give such "compliance assistance" to major corporations when new regulations apply to them. But apparently not one red cent to help the poor or young to GET the required ID, which would help them in so many other aspects of life. Not… one… red… cent.

This is what the once honorable and intellectual movement of Goldwater and Buckley is down to. Cheating, cheating, cheating and more cheating.

Alfred Differ:

Do the real super-villains see themselves as evil, though? Look through the best comics again and I think you'll see the ones with developed characters never do.

I hope we're not really arguing here, but I'm not sure I've made myself clear.

Yes, of course the best characters don't think of themselves as evil. If you look back up at my first post on this subject, my brother and I were making fun of some dialogue by the Red Skull for exactly that reason: it seemed unrealistic to us teenagers that even a Nazi comic book villain would talk about himself in such a manner.

So I'm in total agreement with you there when I say that I'm mystified by the speeches that Nigerian kidnapper guy is giving on tv, which sounds to my ear like bad comic book villain dialogue. Sadly, I don't have to wonder why he kidnapped hundreds of girls to sell on the black market, but I do have to wonder why he wants to go on tv and make speeches like Snidely Whiplash.

Actually, Dr. Brin, Luke's rise in the rebel command structure can be attributed to two things: cronyism and a blind love of the past. He's the "best friend" of Leia, a valid higher-up in the Rebellion, and he's a Jedi, which the Rebellion considers to be a vital symbol.

I see his "leadership" role as symbolic and illusory. He's a PR piece that fights. That's all.

LarryHart:

We aren't arguing about this stuff. I agree with you that this sounds like bad comic book dialogue. What I'm pointing out, though, is that the guy who went on TV doesn't see himself as Snidely Whiplash. It only comes across as bad dialogue to us because we are trained to hear it as such. He probably isn't.

In the last episode of James Burke's 'The Day the Universe Changed' he explained how someone in the past can think it is good to burn witches and how we 'in modern times' can think quite the opposite. It's a powerful lesson in perception and I think that is what is going on here.

Well… Robert… don't forget Luke avenged Alderaan by blowing up the Death Star. That plus being a Jedi and palling with Leia should give him at least a dozen X-wings to command. But logic is moot. Lucas DOES make him a high rebel officer! And hence he would have used my Plan A. If anyone had two neurons to rub together.

Again, Alfred, the top Nazis had no trouble calling themselves evil barbarians.

Oh, don't get me wrong. I agree with you about the idiocy of his being on the Rebel command structure. I was puzzled by it in the movie while a kid - it was like it was tacked on all at once and never explained even in passing.

I'm just saying that if he was a PR-style officer, then he didn't have any power over hardware. There may have also been concerns over "squandering Rebel resources" to rescue one smuggler whose claim to fame was coming back at the last second to help Luke (and why the hell did the Death Star NOT blow a freighter out of the sky when it was on an approach vector during a major battle? Outside of Lucas' need for a "cavalry to the rescue" moment?) and saving a princess... but also drawing bounty hunters down on their position!

So yeah. In all likelihood there were only a couple people in the Rebellion interested in springing Han - Leia, Luke, and whatshisname from Cloud City. (And the Wookie.) I have to wonder if the print adaptation bothered going into any further detail, but it's been ages since I read the book and I'm not sure it's worth trying to find a copy.

Rob H.

Salon concern-trolls us over London police officers wearing video cameras to record all their interactions. http://www.salon.com/2014/05/08/big_brother_is_watching_you_london_cops_test_wearing_cameras_on_their_uniforms/

From the lede- "Advocates tout its role in police accountability and evidence collection. But it comes at the expense of privacy" Like anyone has an expectation of privacy when speaking to an officer?

matthew: strongly disagree. Video cameras are an utterly different species from conversing with someone who has, let's assume, equal memory and perhaps also a notebook. The video can be, ah, "pushed, filed, stamped, indexed, briefed, debriefed, and numbered," if you catch my drift, and any citizen of sound mind should be well within their rights to refuse correspondence with such a wired Gargoyle, to use the fully negative Snow Crash sense of that term. Bruce Schneier has said more about pervasive CCTV. Deliciously Orwellian: I believe at least one "we're watching you" ad has appeared. A few walking video cameras plus all that supporting infrastructure would mean money, and doubtless no little carbon, wasted (Britain now pays dearly to cover its coal, gas, and oil imports) when all that money could instead be spent on, say, improving officer pay, etc.

Heh. I have no issue referring to Americans as barbarians either, and I'm one of them, but I'll leave off 'evil' as a descriptor.

Very well. I went and read more of the security briefings regarding Boko Haram to get the background material, and I no longer think this is a perception issue. I'm pretty sure the guy on the TV intended the bad comic book dialogue. There is a leadership split within Boko Haram, and that could be expressing itself as a contest for who is the baddest among them. Western indignant response might be the measure.

The reason there is a split is the last leader got killed (extrajudicial) a few years ago. I suspect much the same will happen again when the baddest is determined. Someone is trying to be the best possible target and thinks he can do what his predecessor couldn't.

onward

Responding to Alfred Differ beneath the fold here...

Alfred Differ:

In the last episode of James Burke's 'The Day the Universe Changed' he explained how someone in the past can think it is good to burn witches and how we 'in modern times' can think quite the opposite.

There's a new TV series called "Salem," which is a kind of nighttime teenage soap opera set in colonial Salem. I don't watch that sort of thing, but from the ads I've seen, it seems to be subtly promoting the meme of rehabilitating the Salem witch trials, taking the point of view that the witch hunters had a good point. It reminds me of Ann Coulter's attempts at rehabilitating the character of Joe McCarthy.

onward
